PivotDataService Settings

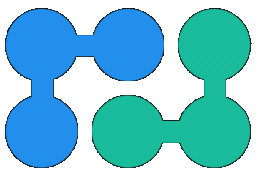

The following diagram illustrates how PivotDataService internally processes incoming API requests to generate reports:

|

Web API

Render pivot table |

→

|

Engine

Load pivot data |

→

|

In-memory cache

Reuse cached data if possible In-memory OLAP calculations |

→

|

Data source

Generate database aggregate query Load query results into in-memory OLAP cube |

| On input API expects pivot table configuration JSON. | Engine verifies that pivot table config is correct in the context of specified cube schema, determines which data is needed to build a report, performs necessary pre- and post- processing. | In-memory cache holds data for pivot table reports executed recently; engine uses cached data whenever possible. | Database query is composed and executed on-the-fly (in the real-time) and microservice works like ROLAP. |

PivotDataService uses ROLAP approach when the dataset is not imported from the data source (there is no ETL process) - instead of that BI engine generates DB queries on-the-fly to load only data that is needed for the requested report configuration (in the same way as PowerBI DirectQuery works).

This means that web API always return actual data and can be used for offering near real-time reporting expirience for end users.

However, your database should be able to execute aggregate queries fast enough (ideally in seconds). Built-in SQL connector supports capabilities that allow to fine tune

SQL queries and achieve good-enough performance even with transactional databases like SQL Server, MySQL, PostgreSQL.

For instant reports by really large datasets, it is recommended to

use specialized

analytical databases that support distributed usage scenarios (BigQuery, Redshift, ClickHouse, DuckDB/MotherDuck).

PivotDataService settings are stored in the appsettings.json file located in the folder with app binaries:

{

"Logging": { /* what to log and where */ },

"Auth": { /* web API auth options */ },

"PivotDataService": {

"License" : { /* license key */ },

"Cubes": [ /* static (hardcoded) cubes schemas */ ],

"CubeProviders": [ /* dynamic cubes providers */ ],

"ConnectionSecrets": { /* special tokens for connection string or URL secrets */ },

"Cache": { /* default data cache options */ },

"Export" : { /* export-related options */ },

"ApiUsageLimit": { /* concurrent API usage restrictions */ },

"InferSchemaRules": { /* infer schema behavior */ },

/* other options */

}

}

If your appsetting.json file is located outside folder with PivotDataService binaries its path should be provided explicitely, for example:

dotnet NReco.PivotData.Service.dll --appsettings.json=c:\config\pivotdataservice\appsettings.json

Logging options

Default "Logging" section looks like:

{

"Logging": {

"LogLevel": {

"Default": "Debug",

"System": "Information",

"Microsoft": "Error",

"NReco.PivotData.Engine": "Information" // set to "Debug" if you want to trace ALL SQL/Mongo/Elasic queries

},

"File": {

"Path":"c:/apps/logs/pivotdataservice.log" // write logs to a local file

}

}

LogLevel section refers to a standard MVC Core logging setup. Most useful entries are:

NReco.PivotData.Engine |

set it to "Debug" to get a detailed trace with ALL generated SQL (or NoSQL queries) executed by connectors. This might be useful for debugging cube configuration errors; it is not recommended to use "Debug" option in production environment as it might cause unconrolled growth of the log file. |

NReco.PivotData.Service.InMemoryCubeCacheManager |

set it to "Debug" to get detailed trace of in-memory cache HITs / invalidation reasons. |

NReco.PivotData.Service.CubeSchemaRepositorySchemaFactory |

set it to "Debug" to trace cube providers JSON load actions. |

File section configures this file logging provider. You may remove or comment out this entry to disable file logging.

Auth options

For testing/demo purposes PivotDataService API can be accessed without authentication. In production use most likely you want to secure API access in one of the following alternatives:

- Configure built-in JWT auth: pass JSON Web Token in "Authentication" HTTP header and configure "Auth" options to verify the token. JWT payload may have context values that are mapped to report parameters (like user ID or tenant ID), in this way it is possible to implement row-level security.

- use PivotDataService behind a reverse proxy (NGINX or IIS) and configure authentication on this front-end web server.

Cubes section

Cube config is a data model that determines what dimensions and measures could be used in reports and how they map to the underlying data source. Typical cube's schema looks like that:

{

"PivotDataService": {

"Cubes": [

{

"Id": "uniqueCubeId",

"Name": "Cube Title",

"SourceType": "SqlDb", /* data source type, see below */

/* source type specific section with connection details */

"Dimensions": [ /* defines a list of dimensions */ ],

"Measures": [ /* defines a list of metrics (values) */ ],

"Parameters": [ /* defines a list of cube's parameters */ ],

}

]

}

}

The following data source types are currently supported:

| Source Type | Description |

|---|---|

| SqlDb | SQL database:

|

| MongoDb | Mongo Database (2.4+), Azure Cosmos DB and other Mongo-protocol compatible DBs |

| ElasticSearch | ElasticSearch (self-hosted or cloud service) |

| XMLA | Analysis Services, icCube via HTTP(S) XMLA endpoint |

| File | Local files: CSV/TSV. Note that you can efficiently query large/remote CSV/JSON (URLs/S3) or Parquet/Iceberg with DuckDB connector. |

| Web API endpoint | Custom connector implemented as a web API endpoint that can handle PivotDataService JSON queries. |

Cubes configuration may be hardcoded in "Cubes" section, or loaded dynamically at run time from some URL or context of the HTTP request (JWT payload).

ConnectionSecrets section

In some cases database credentials should not be present in the cubes configurations. To achieve that you can define special tokens in this way:

{

"PivotDataService": {

"ConnectionSecrets": {

"db_user": "USER_VALUE" // now @db_user may be used in cube's connection string/URL

"db_user_pwd": "PASSWORD_VALUE" // now @db_user_pwd may be used in cube's connection strings/URLs

}

}

}

Cache section

This section defines global in-memory data cache settings (defaults for all cubes). Similar section in the concrete cube's config can override these default settings.

{

"PivotDataService": {

"Cache" : {

"Enabled" : true, // enabled by default

"SlidingExpirationMinutes" : 2, // max life if cached data is not used

"AbsoluteExpirationMinutes" : 10, // max life of cached data

"PivotDataCountThreshold": 300000, // do not cache aggregation results that exceed this threshold

}

}

}

Export section

In this section it is possible to configure various export-specific settings. PDF and Image outputs are generated with help of wkhtmltopdf by HTML templates.

{

"PivotDataService": {

"Export": {

"PdfPivotTemplateHtmlFile": "export/pdf-pivot.html", // path to HTML template file

"PdfTableTemplateHtmlFile": "export/pdf-table.html", // path to HTML template file

"WkhtmltopdfTimeout": 2, // timeout in minutes for PDF exports rendering

"WkhtmltopdfArgs": "", // extra command-line options for wkhtmltopdf (PDF exports)

"WkhtmltoimageArgs": "", // extra command-line options for wkhtmltoimage (Image exports)

"MaxHtmlLength": 0, // prevent PDF generation if report's HTML exceeds this threshold (in chars)

"EnableJsonPivotSubtotals": false, // include subtotals into pivot report JSON exports

"ExcelDateValueFormat": "yyyy\-m\-d", // override default date-only format for Excel exports

"ExcelDateTimeValueFormat": "yyyy\-m\-d h:mm", // override default timestamp format for Excel exports

"ExcelPivotTableCompact": false, // whether to use 'compact' layout for Excel PivotTable exports

"ExcelChart" : true, // whether to export pivot report's chart into Excel's chart

"ExcelChartMaxShiftColumn": 5, // max columns offset for Excel Chart exports

"CsvQuoteIfTrimPossible": true, // whether to quote values with leading/trailing spaces in CSV exports

}

}

}

API Usage Limit options

Reports generation might be a resource consuming, and it is possible to limit a number of concurrent executions to avoid many DB queries at once or prevent shortage of server resources where PivotDataService is hosted (RAM/CPU).

When API limits are configured all requests that match the same criteria are organized in the queue. If a request waits too much (exceeds the specified timeout) API returns HTTP/429 error.

Requests may be grouped on:

- Cube ID: useful to limit number of queries to the same data source)

- ReportConfig: useful if your report may be loaded at once by many users)

- HeaderName: you may pass a custom value in the HTTP header and use it as a criteria. For example this could be user ID or tenant ID, and in this way you can limit the number of parallel executions for the particular user or tentant.

{

"PivotDataService": {

"ApiUsageLimit" : {

"MaxConcurrentRequests":1,

"MaxWaitTimeoutMins":2,

"Criteria": {

"CubeId":true,

"ReportConfig":true,

"HeaderName":null

}

}

}

}

InferSchemaRules options

If cube's InferSchema is enabled its dimensions and measures are automatically inferred from the underlying dataset -

by analyzing columns, their types, names and values. These options allow to affect this behavior:

{

"PivotDataService": {

"InferSchemaRules" : {

"ExcludeMeasureTypes": ["Min","Max"], // exclude these types from suggested measures list

"ExcludeMeasureColumns": ["column_name"] // do not generate measures for these columns

}

}

}

Other options

{

"PivotDataService": {

"EnableResponseCompression": false, // enable in-app HTTP response compression

"EnableTableApi" : true, // enable/disable flat table API, disabled by default

"EnableSearchApi": true, // enable search queries API, disabled by default

"ExposeException": false, // include .NET exception with stacktrace into error's JSON response

"PivotTableCellsLimit": 1000000, // max cells in the pivot table; 0 by default = means 'no limit'

"AggregateRowsLimit": 1000000, // max number of rows that aggregate query can return

"FlatTableRowsLimit": 50000, // max number of rows that may be loaded at once for flat-table reports

"FlatTableNonStreamExportLimit": 50000, // max number of flat-table rows that may be exported to PDF/Excel

"QueryTimeout" : 120, // db query execution timeout in seconds, 120 by default

"CubeLookupDictionarySizeLimit": 100000, // max dictionary size for the Cube.Lookup function

"CsvLineBufferSize": 32768, // buffer size for one CSV line = max length of the CSV line that can be processed

"FormatCulture" : null, // locale (culture name) for dates/numbers formatting, for example: "en-US"

}

}